{-# LANGUAGE TypeOperators #-}

{-# LANGUAGE FlexibleInstances #-}

{-# LANGUAGE RankNTypes #-}

{-# LANGUAGE GADTs #-}

{-# LANGUAGE KindSignatures #-}

{-# LANGUAGE DataKinds #-}

{-# LANGUAGE FunctionalDependencies #-}

{-# LANGUAGE StandaloneDeriving #-}

{-# OPTIONS -W #-}import Control.Monad

import Control.Monad.StateCompiling with Op Trees

Abstract

Definitional interpreters are an approach to specifying programming languages in a concise and executable manner that is easy to read and maintain. However, interpreted language run times are typically orders of magnitude slower than compiled language run times. On the other hand, compilers are typically more challenging to develop than interpreters are, because they typically do multiple transformations on intermediate representations (IRs).

In this blog post we explore how to bridge the gap between interpreters and compilers by using op trees as a compiler IR. Op trees are a generic data structure for representing monadic programs as syntax, such that we can transform them. We illustrate the approach by building a small compiler that compiles a language with arithmetic, if-then-else expressions, and stateful references into a register-based machine language with conditional jumps.

The blog post is literate Haskell, and the contents of the blog post is based on joint work with Andrew Tolmach and Eelco Visser.

Contents

Introduction

There are many approaches to implementing and specifying new programming languages. Important and frequently-used approaches include writing an interpreter, writing a compiler, or giving a mathematical definition. Each approach has its merits:

- Interpreters are comparatively easy to develop, making it easy to experiment with a language design.

- Compilers can yield more efficient language run times than interpreters, but they are comparatively hard to develop.

- Mathematical definitions allow formal verification of meta-theoretical properties of the language and/or verifying programs written in the language.

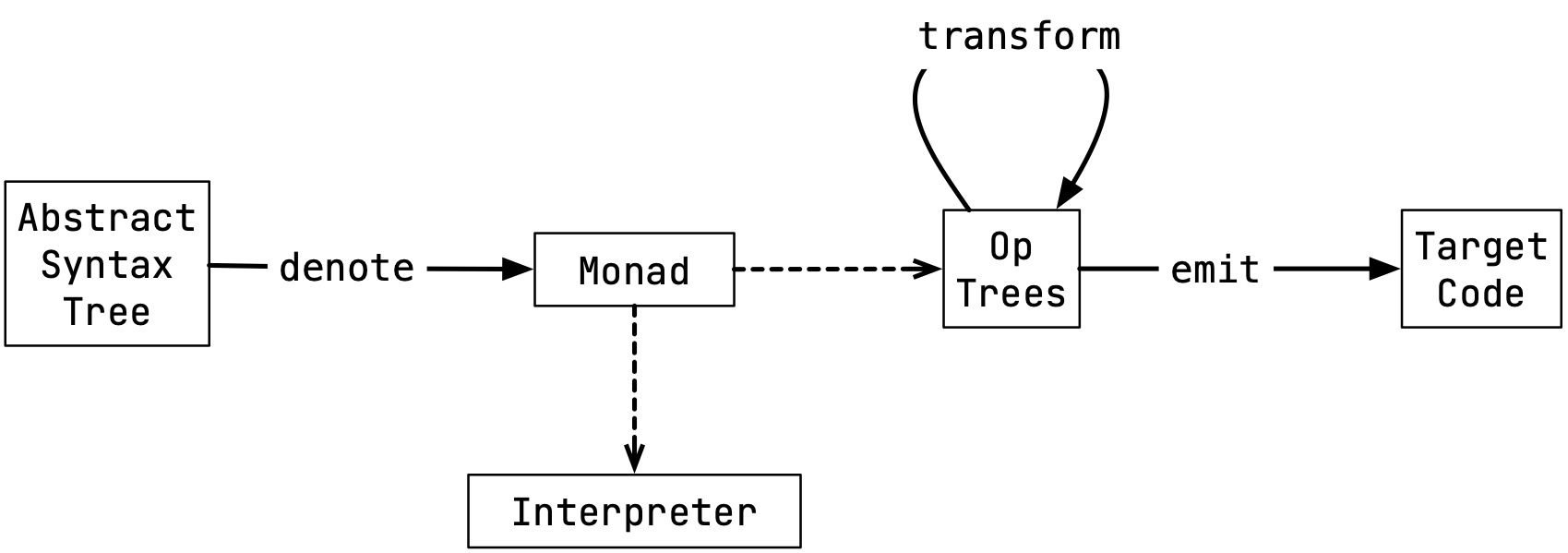

In this blog post we show how to do all three at once, by defining our language in terms of op trees. The blog post focuses on how op trees can be used for compilation; but op trees are instances of the widely-used free monad (see, e.g., the “free” package, or one of the many other implementations available on Hackage of the free monad), for which other blog posts describe how they can be used to implement interpreters, and what the mathematical foundations of the free monad are. We will be defining and implementing a language via the following pipeline:1

This pipeline, in words:

- We first map abstract syntax trees to a monadic semantics via a

denotefunction. Thedenotefunction is a definitional interpreter. - The operations of the monad used in our definitional interpreter is given by a type class that defines the API of the meaning-giving constructs in our interpreter. We give two instances of this type class: (a) as an interpreter (the lower dotted line in the pipeline above), and (b) as an op tree compiler (the other dotted line).

- We define transformations to work on op trees, via a

transformfunction. - After we have applied the necessary transformations, target code is

emitted.

The rest of the blog post describes this pipeline.

We assume familiarity with Haskell and (some of) its features for lightweight dependent types.

A Definitional Interpreter for an Expression Language

We consider a simple language with arithmetic expressions (Num, Plus, and Sub), less-than-equals expressions (Lte), if-then-else expressions (Ite), and stateful reference expressions (MkRef, Asgn, and Deref).

Its abstract syntax is given by the following data type:

data Expr = Num Int

| Plus Expr Expr

| Sub Expr Expr

| Lte Expr Expr

| Ite Expr Expr Expr

| MkRef Expr

| Asgn Expr Expr

| Deref ExprWe shall implement a definitional interpreter for our language that maps each expression to a monadic computation, for some notion of monad that provides the operations captured in the three APIs below for arithmetic, less-than-equals and if-then-else, and stateful references:

class ArithmeticAPI m v where

num :: Int -> m v

plus :: v -> v -> m v

sub :: v -> v -> m v

class LteIteAPI m v where

lte :: v -> v -> m v

ite :: v -> m v -> m v -> m v

class StateAPI m v where

mkref :: v -> m v

asgn :: v -> v -> m v

deref :: v -> m vThese APIs define a language run time that has a means of creating and adding numbers (num and plus), comparing them (lte), branching on a boolean value (ite), and creating, assigning, and dereferencing a value (mkref, asgn, and deref).

The APIs are parametric in both the notion of monad (m) and notion of value (v).

Our definitional interpreter is given by the denote function:

denote :: (Monad m, ArithmeticAPI m v,

LteIteAPI m v, StateAPI m v) => Expr -> m v

denote (Num i) = num i

denote (Plus e1 e2) = do

v1 <- denote e1

v2 <- denote e2

plus v1 v2

denote (Sub e1 e2) = do

v1 <- denote e1

v2 <- denote e2

sub v1 v2

denote (Lte e1 e2) = do

v1 <- denote e1

v2 <- denote e2

lte v1 v2

denote (Ite e e1 e2) = do

v <- denote e

ite v (denote e1) (denote e2)

denote (MkRef e) = do

v <- denote e

mkref v

denote (Asgn e1 e2) = do

v1 <- denote e1

v2 <- denote e2

asgn v1 v2

denote (Deref e) = do

v <- denote e

deref vAt this point, readers familiar with Haskell and monads can probably see how the API can be instantiated to yield an interpreter. It is less obvious how to instantiate the APIs to yield a compiler. We leave the interpreter instance of the APIs as an exercise for the reader, and focus on the problem of instantiating the APIs to yield a compiler. First, let us consider the (imaginary) target language for our compiler.

A Target Register Machine and Its Instruction Set

The register machine we consider is going to have an instruction set corresponding to the type class TargetAPI:

class TargetAPI reg lbl instr | instr -> reg, instr -> lbl where

iload :: Int -> reg -> instr -> instr

iadd :: reg -> reg -> reg -> instr -> instr

isub :: reg -> reg -> reg -> instr -> instr

lbl :: lbl -> instr -> instr

jmp :: lbl -> instr -> instr

jmpltez :: reg -> lbl -> instr -> instr

mov :: reg -> reg -> instr -> instr

done :: reg -> instrThe type class is parametric in the type of registers (reg), the type of labels (lbl), and the type of instructions instr.

The instructions are as follows:

iload i r xsloads an integeriinto registerr, and proceeds with the remaining instructionsxs.iadd r1 r2 r3 xsadds two integer values in registersr1andr2, stores the result in registerr3, and proceeds with the remaining instructionsxs.isub r1 r2 r3 xssubtracts two integer values, akin toiadd.lbl l xslabels the instructions fromxsonwards, and proceeds with the remaining instructionsxs.jmp lrepresents an unconditional jump tol.jmpltez r l xsjumps to the labellifris less than or equal to zero, or proceeds with the remaining instructionsxsotherwise.mov r1 r2 xsmoves the value stored inr1intor2and proceeds with the remaining instructionsxs.done rindicates that there are no remaining instructions, and indicates that a return value can be found in registerr.

(Note that the target language does not have any heap, so references will be modeled as registers.)

Our objective is to define a compiler from ArithmeticAPI, LteIteAPI, and StateAPI to TargetAPI; i.e., for suitable notions of values, registers, and labels:

compileI :: (ArithmeticAPI m v, LteIteAPI m v, StateAPI m v,

TargetAPI reg lbl instr) => m v -> instrThis compile function has a monad in its domain.

But how do we pattern match on monads?

Op trees are the answer to this question.

Op Trees

In a nut shell, op trees are syntactic representations of monadic computations. To define the shape of the monadic computations that an op tree can contain, op trees are parametric in a notion of signature functor. Signature functors describe the type of any monadic operation that matches the pattern given by the following type signature:

monadOp :: v1 -> .. -> vn -> (a1 -> m w1) -> ... -> (am -> m wm) -> m uThat is, signature functors comprise the following information about the syntactic shape of a monadic operation:

- The operation name and its value parameters (

v1 ... vn); - The parameter types (

a1 ... am) and return types (w1 ... wm) of sub-computations; and - The return type of the operation (

u)

This information is captured by the following OpSig type, the type of signature functors:

type OpSig = [(* , *)] -> -- subs

* -> -- ret

*For any sig :: OpSig, the application sig subs ret defines the signature of a monadic operation, where ret is the return type of the operation, and subs is a list of pairs of a parameter type and a return type.

The pairs in this list describe the shape of each monadic sub-computation ((a1 -> m w1) -> ... (a2 -> m w2)).

The following Subs data type defines a list of monadic sub-computations whose shape is given by such a list of pairs subs :: [(* , *)]:

infixr 7 :::data Subs m (subs :: [(* , *)]) where

Nil :: Subs m '[]

(:::) :: (a -> m w) -> Subs m subs -> Subs m ('(a, w) ': subs)Now, an op tree is but a sequence of monadic computations given by some signature sig :: OpSig:

data OpTree (sig :: OpSig) a where

Leaf :: a ->

OpTree sig a

Node :: sig subs ret ->

Subs (OpTree sig) subs ->

(ret -> OpTree sig a) ->

OpTree sig aThe Leaf constructor is a syntactic representation of a monadic computation that returns a value.

The Node constructor is a syntactic representation of a monadic computation (do v <- monadOp x1 ... xn c1 ... cn; k v) :: m a where:

monadOp :: v1 -> .. -> vn -> (a1 -> m w1) -> ... -> (am -> m wm) -> m u

xi :: vi -- i ∈ 1 ... n

ci :: ai -> m wi -- i ∈ 1 ... m

k :: u -> m aConcatenating Op Trees

Op trees are functorial, and concatenatable. Uncoincidentally, the type of the concatenation operation for op trees coincides with monadic bind.

instance Functor (OpTree sig) where

fmap f (Leaf x) = Leaf (f x)

fmap f (Node op subs k) = Node op subs (fmap f . k)instance Applicative (OpTree sig) where

pure = Leaf

(<*>) = apinstance Monad (OpTree sig) where

Leaf x >>= g = g x

Node op subs k >>= g =

Node op subs (\ x -> k x >>= g)Crucially, concatenation (monadic bind) does not distribute over subs; only over continuations k.

This distinguishing feature of op trees is essential for the purpose of using op trees as an intermediate representation in a compiler.

If op tree concatenation would concatenate into subs, we would obtain a compiler that duplicates code unnecessarily.

(We invite the reader to read the rest of this blog post, and revisit this statement after seeing how op trees are used for compilation.)

Op Trees are Instances of the Free Monad

Op trees are an instance of the widely-used notion of free monad. If you are not familiar with the free monad, you may wish to skip to the next section of the blog post.

The standard free monad is given by the following type:

data Free f a = Pure a

| Impure (f (Free f a))If f is functorial, then Free f is functorial and monadic:

instance Functor f => Functor (Free f) where

fmap f (Pure a) = Pure (f a)

fmap f (Impure g) = Impure (fmap (fmap f) g)instance Functor f => Applicative (Free f) where

pure = Pure

(<*>) = apinstance Functor f => Monad (Free f) where

return = Pure

Pure x >>= g = g x

Impure f >>= g = Impure (fmap (\ k' -> k' >>= g) f)To see how Tree is an instance of Free, consider the following J functor:

data J sig a where

J :: sig subs ret ->

Subs (Free (J sig)) subs ->

(ret -> a) ->

J sig a

instance Functor (J sig) where

fmap f (J op subs k) = J op subs (f . k)Using J:

type OpTree' sig a = Free (J sig) aIf we unfold the definition of J in the data type Free (J sig) a, and if we unfold the definition of fmap in the monadic bind of Monad (Free (J sig)), we see that OpTree sig a and OpTree' sig a are, in fact, equivalent.

Why “Op Trees”? (A Bit of Historical Context)

Op trees borrow their naming from Hancock and Setzer’s I/O trees for defining and reasoning about interactive systems by encoding them in dependent type theory (Hancock and Setzer 2000). I/O trees are a little simpler than op trees, insofar as the encoding of I/O trees that Hancock and Setzer gave only supports monadic operations without monadic sub-computations; i.e., monadic computations that have the form:

monadOp :: v1 -> ... -> vn -> m uThe goal of Hancock and Setzer was to provide a model for Haskell’s I/O computations which, at the time, did not include operations with monadic sub-computations. This blog post pursues a different goal.

Operation Signatures for Our APIs

We define operation signatures for the monadic operations in MonadAPI.

We do so using three distinct signatures, for each of the three classes of constructs that our language contains: ArithOp defines the signature of the monadic operations in arithmetic operations; LteIteOp defines the signature of less-than-equals and if-then-else expressions; and StateOp defines the signature of stateful reference operations.

Each signature is parametric in a notion of value v.

data ArithOp v :: OpSig where

NumOp :: Int -> ArithOp v '[] v

PlusOp :: v -> v -> ArithOp v '[] v

SubOp :: v -> v -> ArithOp v '[] vdata LteIteOp v :: OpSig where

LteOp :: v -> v -> LteIteOp v '[] v

IteOp :: v -> LteIteOp v '[ '((), v), '((), v) ] vdata StateOp v :: OpSig where

MkRefOp :: v -> StateOp v '[] v

AsgnOp :: v -> v -> StateOp v '[] v

DerefOp :: v -> StateOp v '[] vThese signatures can be combined using the signature sum type:

infixr 7 :+:data (:+:) (sig1 :: OpSig) (sig2 :: OpSig) :: OpSig where

Inj1 :: sig1 subs ret -> (sig1 :+: sig2) subs ret

Inj2 :: sig2 subs ret -> (sig1 :+: sig2) subs retThe monadic operations in MonadAPI are thus given by:

type MonadAPISig v = ArithOp v :+: LteIteOp v :+: StateOp vIn fact, OpTree (MonadAPISig v) is an instance of our monad APIs:

instance ArithmeticAPI (OpTree (MonadAPISig v)) v where -- elided num i =

Node (Inj1 (NumOp i)) Nil Leaf

plus v1 v2 =

Node (Inj1 (PlusOp v1 v2)) Nil Leaf

sub v1 v2 =

Node (Inj1 (SubOp v1 v2)) Nil Leafinstance LteIteAPI (OpTree (MonadAPISig v)) v where -- elided lte v1 v2 =

Node (Inj2 (Inj1 (LteOp v1 v2))) Nil Leaf

ite v m1 m2 =

Node (Inj2 (Inj1 (IteOp v))) (const m1 ::: const m2 ::: Nil) Leafinstance StateAPI (OpTree (MonadAPISig v)) v where -- elided mkref v =

Node (Inj2 (Inj2 (MkRefOp v))) Nil Leaf

asgn v1 v2 =

Node (Inj2 (Inj2 (AsgnOp v1 v2))) Nil Leaf

deref v =

Node (Inj2 (Inj2 (DerefOp v))) Nil LeafIn other words, the signatures we have just given and the op tree concatenation operation we gave earlier, provides an op code compiler:

opcomp :: Expr -> OpTree (MonadAPISig v) v

opcomp = denoteWe could go the standard route of free monads and provide handlers for the signature functor operations, to build an interpreter with modular effects following, e.g., (Swierstra 2008). In this blog post we consider how op trees provide a handle on not just effects, but also compilation.

Compiling If-Then-Else Operations

We introduce a signature for labeled jumps, akin to the jmp and jumpltez instructions of our target machine.

The operation signatures correspond to the three monadic operations in the following type class:

class LabelJumpLtezAPI m l v | m -> l, m -> v where

label :: (l -> m v) -> m v

jump :: l -> m v

jumpltez :: v -> l -> m vThe semantics of these operations is intended to correspond to the instructions of the target machine:

label flabels its continuation. That is, in a computationdo v <- label f; k, the label thatftakes as argument refers tok.jump ljumps to the labell.jumpltez v lchecks ifvis less-than or equal to zero and jumps tolif it is.

The signature of these operations is:

data LabelJumpLtezOp l v :: OpSig where

LabelOp :: LabelJumpLtezOp l v '[ '(l , v) ] v

JumpOp :: l -> LabelJumpLtezOp l v '[] v

JumpLtezOp :: v -> l -> LabelJumpLtezOp l v '[] vWe shall compile op trees with LteIteOp operations into op trees with LabelJumpLtezOp operations instead.

For convenience, we first define a type alias for our notion of target op trees and show that such trees are an instance of the LabelJumpLtezAPI:

type TargetAPISig l v = ArithOp v :+: LabelJumpLtezOp l v :+: StateOp vinstance LabelJumpLtezAPI (OpTree (TargetAPISig l v)) l v where -- elided label m = Node (Inj2 (Inj1 LabelOp)) (m ::: Nil) Leaf

jump l = Node (Inj2 (Inj1 (JumpOp l))) Nil Leaf

jumpltez v l = Node (Inj2 (Inj1 (JumpLtezOp v l))) Nil LeafNote that TargetAPISig also satisfies the ArithmeticAPI and StateAPI classes:

instance ArithmeticAPI (OpTree (TargetAPISig l v)) v where -- elided num i =

Node (Inj1 (NumOp i)) Nil Leaf

plus v1 v2 =

Node (Inj1 (PlusOp v1 v2)) Nil Leaf

sub v1 v2 =

Node (Inj1 (SubOp v1 v2)) Nil Leafinstance StateAPI (OpTree (TargetAPISig l v)) v where -- elided mkref v =

Node (Inj2 (Inj2 (MkRefOp v))) Nil Leaf

asgn v1 v2 =

Node (Inj2 (Inj2 (AsgnOp v1 v2))) Nil Leaf

deref v =

Node (Inj2 (Inj2 (DerefOp v))) Nil LeafWe now define a function transform that compiles the computations given by the signature LteIteOp into LabelJumpLtezOp:

transform :: OpTree (MonadAPISig v) a -> OpTree (TargetAPISig l v) a

transform (Leaf x) = Leaf x

transform (Node (Inj2 (Inj1 (IteOp v))) (k1 ::: k2 ::: Nil) k) = do

vz <- num 0

vt <- mkref vz

label (\ ljoin -> do

label (\ lthen -> do

jumpltez v lthen

v <- transform (k2 ())

asgn vt v

jump ljoin)

v <- transform (k1 ())

asgn vt v)

v <- deref vt

transform (k v)

transform (Node (Inj2 (Inj1 (LteOp v1 v2))) Nil k) = do

v <- sub v1 v2

transform (k v)

transform (Node (Inj1 s) subs k) = -- op unchanged

Node (Inj1 s) (mapSubs transform subs) (transform . k)

transform (Node (Inj2 (Inj2 s)) subs k) = -- op unchanged

Node (Inj2 (Inj2 s)) (mapSubs transform subs) (transform . k)Here:

mapSubs :: (forall a. f a -> g a) ->

Subs f subs -> Subs g subs -- elidedmapSubs _ Nil = Nil

mapSubs f (k ::: subs) = (f . k) ::: mapSubs f subsThe only two interesting cases of transform are the cases for if-then-else operations (IteOp) and less-than-equals operations (LteOp), which describe how if-then-else expressions are translated into labeled conditional jumps, and how less-than-equals expressions are translated into an integer subtraction operation, with the understanding that numbers less than or equal to zero represent Boolean truth.

We are now ready to emit target code from our op trees of type OpTree (TargetAPISig l v) a. Before doing so, let us consider:

What Did Op Trees Buy Us?

Op trees let us apply transformations that made explicit at the type level what the transformation that we applied was: the transformation is summarized as the difference between the signatures before and after transformation:

ArithOp v :+: LteIteOp v :+: StateOp vand

ArithOp v :+: LabelJumpLtezOp l v :+: StateOp vOp trees capture the structure of the operations that make up the definitional interpreter itself, and uses this as the basis for semantics-directed compilation.

Emitting Code

We emit code that matches the following data type, corresponding to the TargetAPI type class:

data Instr = ILoad Int Reg Instr

| IAdd Reg Reg Reg Instr

| ISub Reg Reg Reg Instr

| Lbl Label Instr

| Jmp Label Instr

| JmpLtez Reg Label Instr

| Mov Reg Reg Instr

| Done RegHere:

type Reg = String

type Label = StringWe also introduce a notion of concatenation for instructions. Our compiler will make use of this:

concatI :: Instr -> (Reg -> Instr) -> Instr -- elidedconcatI (ILoad i r c) k = ILoad i r (concatI c k)

concatI (IAdd r1 r2 r c) k = IAdd r1 r2 r (concatI c k)

concatI (ISub r1 r2 r c) k = ISub r1 r2 r (concatI c k)

concatI (Lbl l c) k = Lbl l (concatI c k)

concatI (Jmp l c) k = Jmp l (concatI c k)

concatI (JmpLtez r l c) k = JmpLtez r l (concatI c k)

concatI (Mov r1 r2 c) k = Mov r1 r2 (concatI c k)

concatI (Done r) k = k rWe introduce a monad Gen for generating fresh register names and fresh label names (a counter for each):

type Gen = State (Int, Int)Here, State refers to a standard state monad.

fresh generates a fresh register name:

freshr :: Gen Reg

freshr = do

(i, l) <- get

put (i + 1, l)

return ("r" ++ show i)

freshl :: Gen Label

freshl = do

(i, l) <- get

put (i, l + 1)

return ("l" ++ show l)instantiate k uses fresh to generate a new register name, and passes that name to k:

instantiate :: (Reg -> Gen b) -> Gen (Reg, b)

instantiate k = do

r <- freshr

k' <- k r

return (r, k')Using fresh and instantiate, we define a compileI function from op trees to instructions, where commands are parameterized by register names rather than values (Cmd Reg):

compileI :: OpTree (TargetAPISig Label Reg) Reg -> Gen Instr

compileI (Leaf x) = return (Done x)

compileI (Node (Inj1 (NumOp i)) Nil k) = do

(r, c) <- instantiate (compileI . k)

return (ILoad i r c)

compileI (Node (Inj1 (PlusOp r1 r2)) Nil k) = do

(r, c) <- instantiate (compileI . k)

return (IAdd r1 r2 r c)

compileI (Node (Inj1 (SubOp r1 r2)) Nil k) = do

(r, c) <- instantiate (compileI . k)

return (ISub r1 r2 r c)

compileI (Node (Inj2 (Inj1 LabelOp)) (k1 ::: Nil) k) = do

l <- freshl

c1 <- compileI (k1 l)

(_, c) <- instantiate (compileI . k)

return (concatI c1 (const (Lbl l c)))

compileI (Node (Inj2 (Inj1 (JumpOp l))) Nil k) = do

(_, c) <- instantiate (compileI . k)

return (Jmp l c)

compileI (Node (Inj2 (Inj1 (JumpLtezOp r l))) Nil k) = do

(_, c) <- instantiate (compileI . k)

return (JmpLtez r l c)

compileI (Node (Inj2 (Inj2 (MkRefOp r1))) Nil k) = do

(r, c) <- instantiate (compileI . k)

return (Mov r1 r c)

compileI (Node (Inj2 (Inj2 (AsgnOp r1 r2))) Nil k) = do

(_, c) <- instantiate (compileI . k)

return (Mov r2 r1 c)

compileI (Node (Inj2 (Inj2 (DerefOp r1))) Nil k) = do

(r, c) <- instantiate (compileI . k)

return (Mov r1 r c)Now:

comp :: Expr -> Instr

comp = fst .

flip runState (0, 0) .

compileI .

transform .

denoteinstance Show Instr where

show (ILoad i r c) =

"iload " ++ show i ++ " " ++ r ++ "\n" ++ show c

show (IAdd r1 r2 r c) =

"iadd " ++ r1 ++ " " ++ r2 ++ " " ++ r ++ "\n" ++ show c

show (ISub r1 r2 r c) =

"isub " ++ r1 ++ " " ++ r2 ++ " " ++ r ++ "\n" ++ show c

show (Lbl l c) =

l ++ ":\n" ++ show c

show (Jmp l c) =

"jmp " ++ l ++ "\n" ++ show c

show (JmpLtez r l c) =

"jmpltez " ++ r ++ " " ++ l ++ "\n" ++ show c

show (Mov r1 r2 c) =

"mov " ++ r1 ++ " " ++ r2 ++ "\n" ++ show c

show (Done r) =

"done " ++ rEt voilà:

λ> comp (Ite (Lte (Num 0) (Num 1)) (Num 42) (Num 1337))

iload 0 r0

iload 1 r1

isub r0 r1 r2

iload 0 r3

mov r3 r4

jmpltez r2 l1

iload 1337 r6

mov r6 r4

jmp l0

l1:

iload 42 r10

mov r10 r4

l0:

mov r4 r13

done r13Beyond Arithmetic Expressions

Since writing this blog post, Bernard Bot has written his MSc thesis on using op trees as an IR for a CPS-based compiler.

We have recently coauthored a paper (submitted for review) together with Andrew Tolmach and Eelco Visser that builds on his work.

The paper also shows how OpTrees lets us write compiler transformations that avoid boilerplate code.

The paper uses a slightly different notion of OpTree from what this blog post shows, but the basic idea is the same.

Conclusion

We have presented op trees, and illustrated how they can be used for semantics-directed transformation and compilation of monadic programs.

Acknowledgements

Thanks to Arjen Rouvoet and Bernard Bot for feedback on an earlier version on this blog post.

References

Language definitions typically also define syntax and static semantics (e.g., type checking). In this post, we focus on dynamic semantics.↩︎